These notes cover basic knowledge in linear algebra, statistics and probability, and machine learning, based on the CS229: Machine Learning course. While preparing for my PhD interview, I compiled these notes, deliberately omitting certain topics that seemed unlikely to be covered in the interview (which turned out to be true). Given my limited experience, there may be some errors in these notes. If you notice any mistakes or have suggestions for improvement, please feel free to email me. My contact information is available on my homepage.

References:

A special thanks to everyone involved in creating and maintaining this course, for making it open and freely accessible.

Authored by Bingkui Tong

December 19, 2024

Linear Algebra

| English | 中文 | Complementary |

|---|---|---|

| transpose | 转置 | |

| column vector | 列向量 | 一般向量认为只有一列的矩阵 |

| row vector | 行向量 | 一般用列向量的转置表示行向量 |

| product | 积 | 比如两个矩阵相乘的结果 |

| inner / dot product | 内积/点积 | 两个向量相乘的结果(常数) |

| coefficient | 系数 | |

| associative | 结合律 | (AB)C = A(BC) |

| distributive | 分离律 | A(B+C) = AB + AC |

| commutative | 交换律 | AB = BA (For matrix multiplication generally no) |

| identity matrix | 单位矩阵 | 对角线全为1,非对角线为0 |

| diagonal matrix | 对角矩阵 | 除了对角线全为0 |

| symmetric | 对称的 (only for square matrix) | |

| anti-symmetric | 反对称的 (only for square matrix) | |

| For any matric The first matrix on the right is symmetric and the second is anti-symmetric | ||

| trace | 迹 (only for square matrix) | 对角线元素之和 |

| 对于方阵AB, | ||

| square matrix | 方阵 | |

| norm | 范数 (only for vector) | 欧几里得范数(Euclidean or |

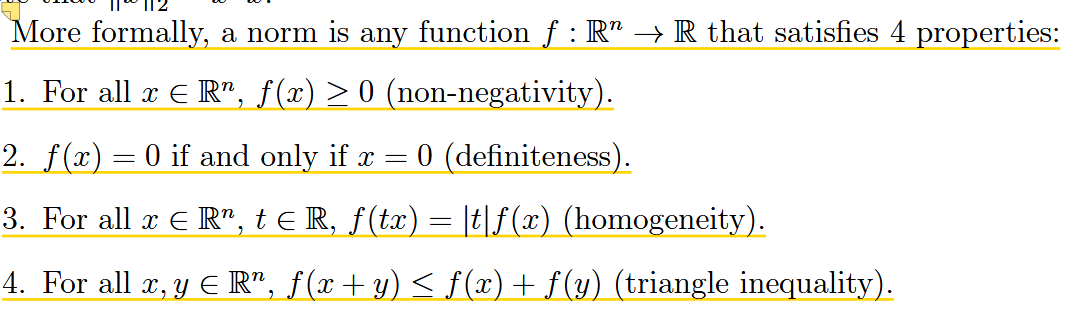

The four properties a norm should be satisfied: non-negativity, definiteness, homogeneity, triangle inequality | ||

| linear independent | 线性无关性 | For a set of vectors { |

| Linear dependent: | ||

| rank | 秩 | |

| row rank | 行秩 | The size of largest subset of rows of A that constitute a linearly independent set The number of linearly independent rows |

| column rank | 列秩 | The size of largest subset of columns of A that constitute a linearly independent set The number of linearly independent columns |

| For | ||

| inverse | 逆 (only for square matrix) | The inverse of Not all matrices have inverses |

| invertible/non-singular | 可逆/非奇异 | A must be full rank to have an inverse |

| non-invertible/singular | 不可逆/奇异 | |

| orthogonal matrix | 正交矩阵 | Vectors: x and y is orthogonal if Matrix: a square matrix |

| normalized | 归一化 | Usually we use orthogonal to describe the square matrix |

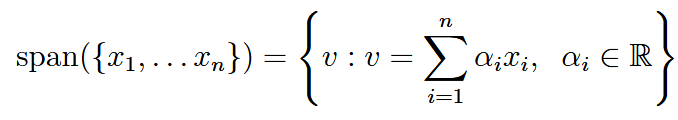

| span | 生成子空间/张成 | The span of a set of vectors is the set of all vectors that can be expressed as a linear combination of  |

| if | ||

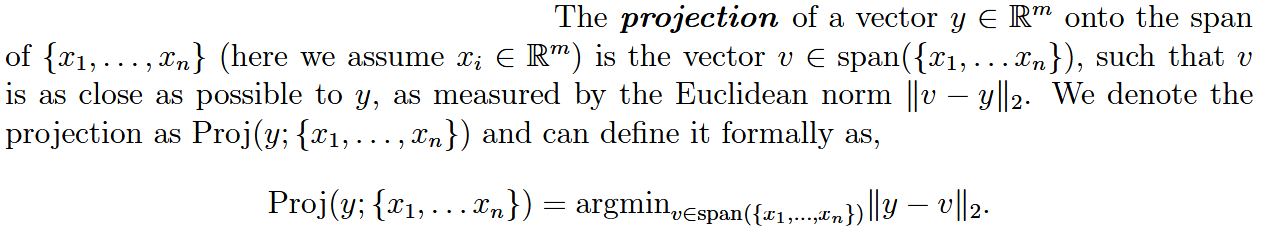

| projection | 投影 | 一个向量到另外一组向量的投影就等于这一组向量的生成子空间中与这个向量欧几里得范数的最小值 |

| range/columnspace | 列空间/值空间 | |

| nullspace | 零空间 | nullspace of a matrix means a set of vectors equal to 0 when multiplied by A. |

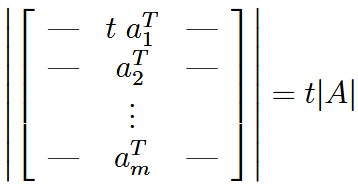

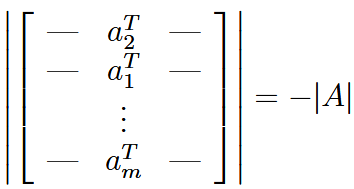

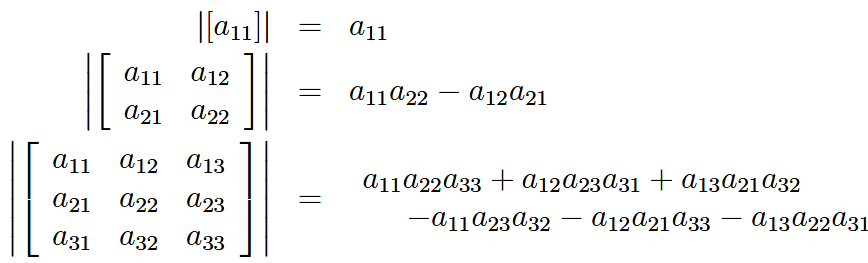

| determinant | 行列式 |  |

some properties:  | ||

| full rank: determinant is not 0 / full rank (for square matrix) | ||

| Laplace展开求行列式: 这里可以任选展开一行或者一列 | ||

| ||

| classical adjoint | (经典)伴随矩阵 | |

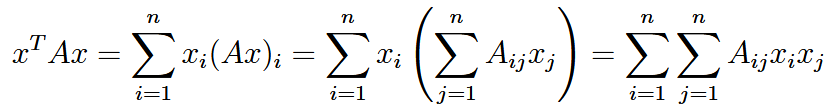

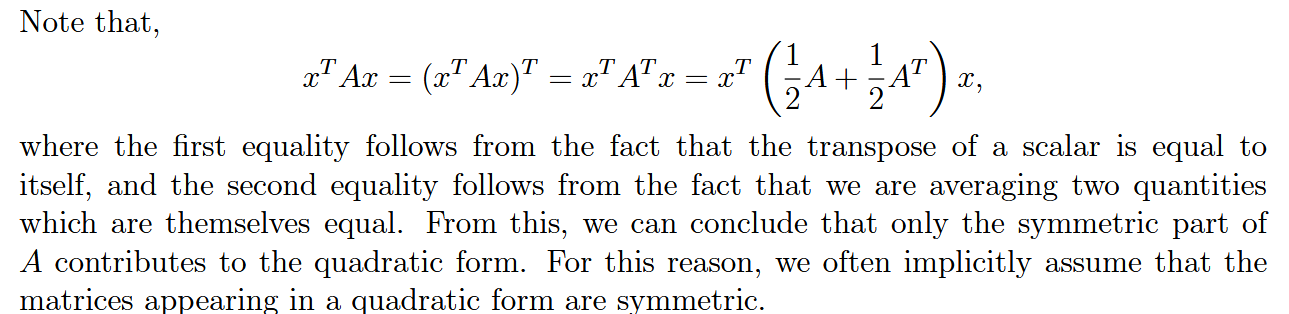

| quadratic form | 二次形式 | the scalar value  |

Usually we explicitly assume that the matrix appearing in quadratic form is symmetric 这里 | ||

| positive definite (PD) | 正定 | For symmetric matrix Usually denoted A as |

| positive semidefinite (PSD) | 半正定 | for all vectors (not all non-zero vectors) |

| negative definite (ND) | 负定 | For symmetric matrix Usually denoted A as |

| negative semidefinite (NSD) | 半负定 | for all vectors (not all non-zero vectors) |

| indefinite | 不定 | neither positive semidefinite nor negative semidefinite |

| ||

| positive/negative definite matrices are full rank, hence, invertible | ||

| gram matrix | 格拉姆矩阵 |  |

| eigenvalue | 特征值 | |

| eigenvector | 特征向量 | Intuitively, this definition means that multiplying |

| Usually we assume the eigenvector is normalized because if | ||

| Only when | ||

| Some properties: 逆矩阵的特征值是原矩阵的倒数 The eigenvalues of a diagonal matrix are just the diagonal entries | ||

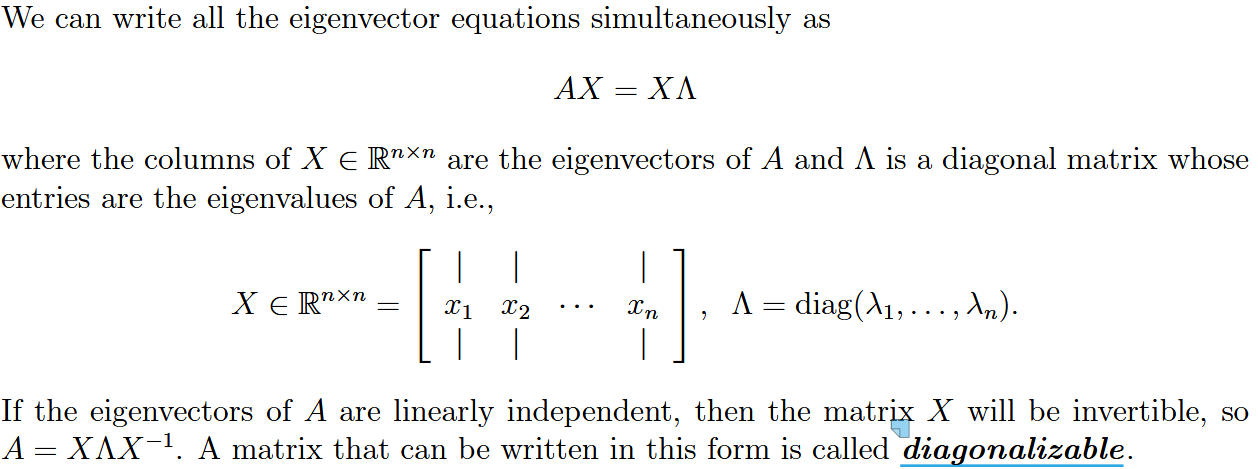

| diagonalizable | 可对角化 |  |

| 可逆矩阵的特征值数量为n(维度),不可逆矩阵行列式为0,因为有特征值为0 | ||

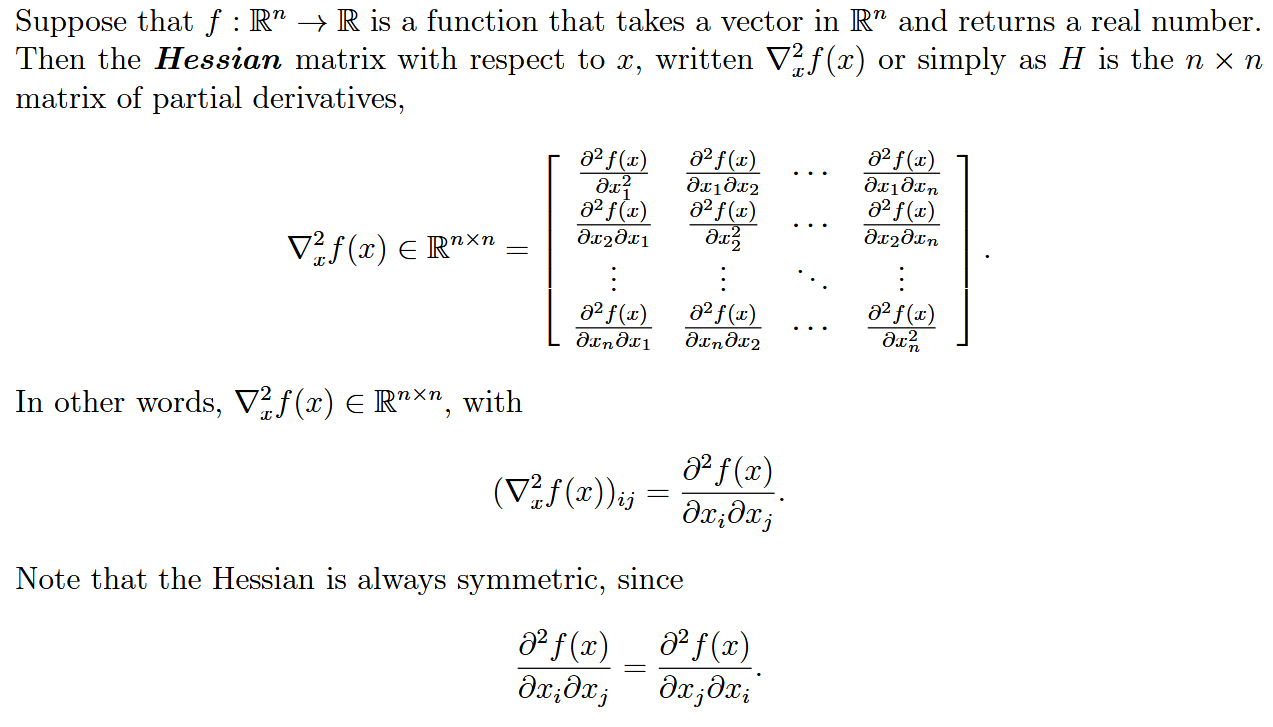

| Hessian | Hessian 矩阵 |  就是个二阶导矩阵 |

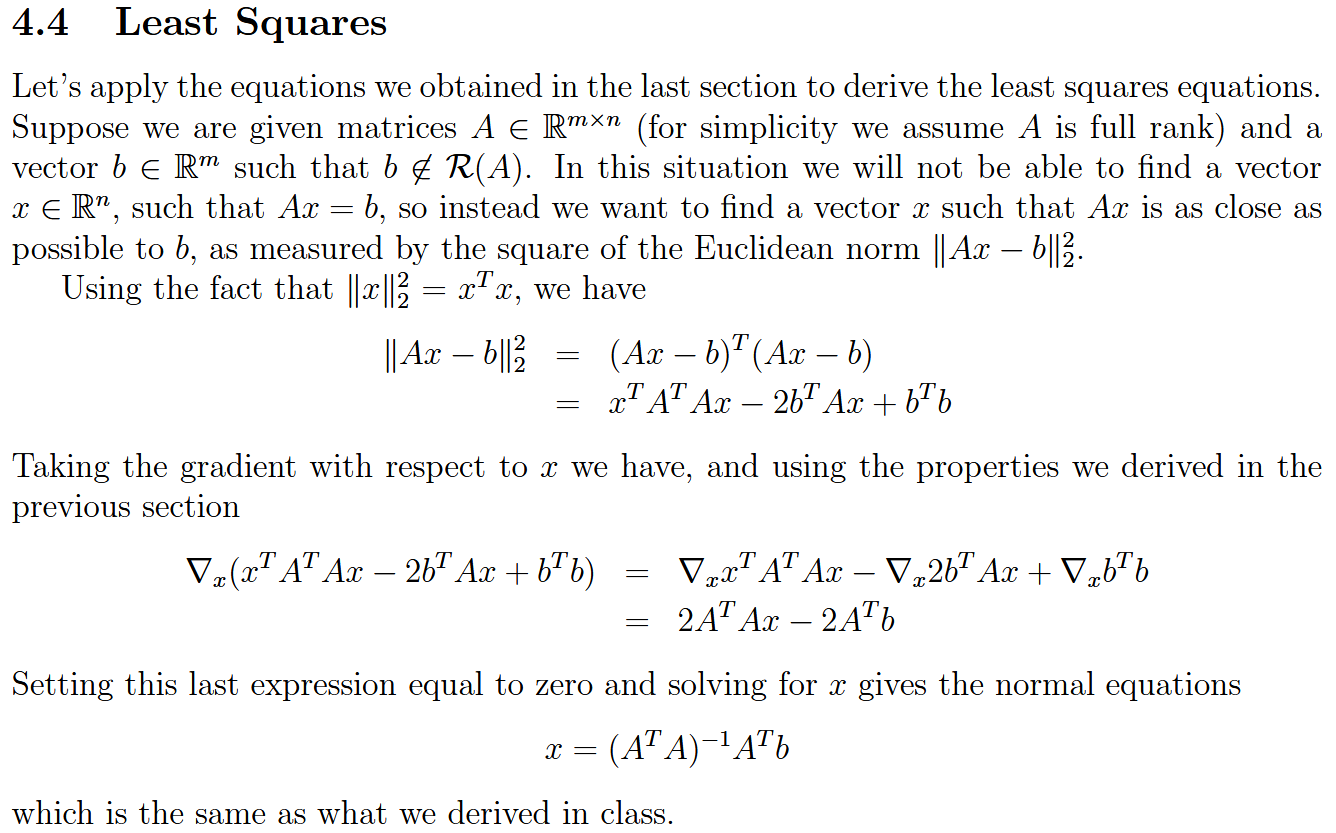

| least square | 最小二乘法 |    使用了这两个公式 |

Statistics and Probability

| English | 中文 | Complementary |

|---|---|---|

| sample space | 样本空间 | |

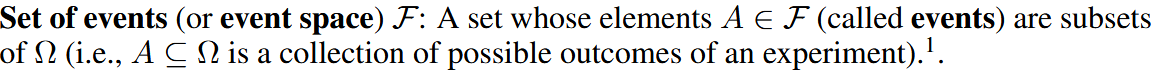

| set of events (event space) | 事件集(事件空间) |  properties: |

| conditional probability | 条件概率 | |

| independent | 独立 | |

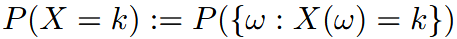

| random variables | 随机变量 | A function: |

| discrete random variables | 离散随机变量 |  |

| continuous random variable | 连续随机变量 |  |

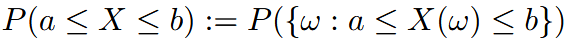

| cumulative distribution function | 累计分布函数 | properties:  |

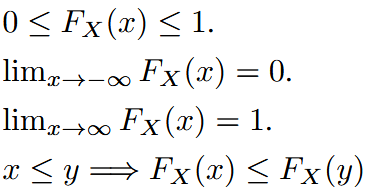

| probability mass function (for discrete random variables) | 概率质量函数 | properties:  |

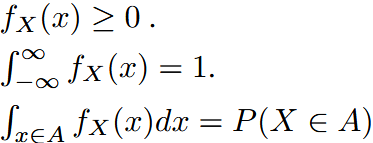

| probability density function (for continuous random variable) | 概率密度函数 | properties:  |

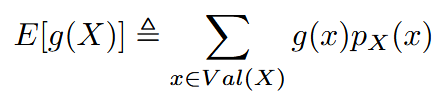

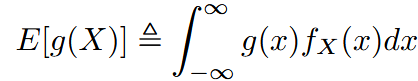

| expectation | 期望(这里说的是概率期望) | For discrete random variable: For continuous random variable:  |

| this is kind of “weighted average” of the | ||

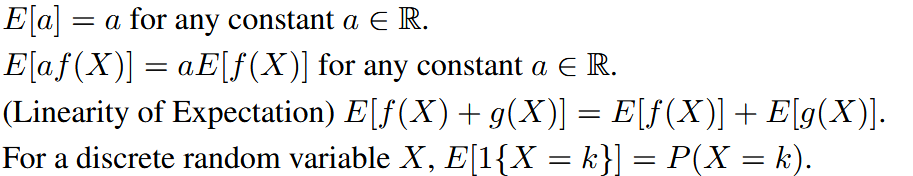

properties: | ||

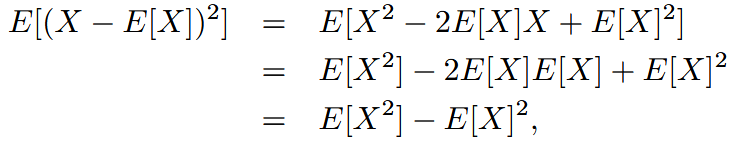

| variance | 方差 | The variance of a random variable  properties: |

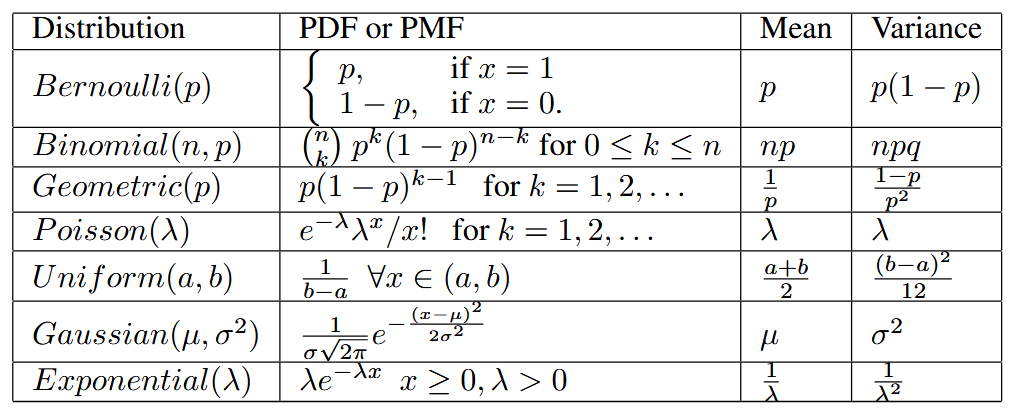

| Some common random variables | ||

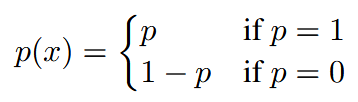

| Bernoulli | 伯努利分布 |  |

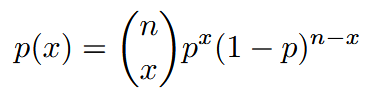

| Binomial | 二项分布 |  |

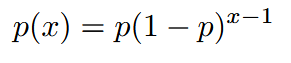

| Geomatric | 几何分布 |  |

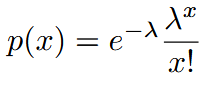

| Poisson | 泊松分布 |  |

| Continuous random variables | ||

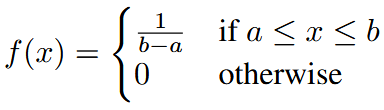

| Uniform | 均匀分布 |  |

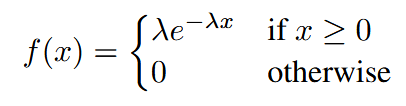

| Exponential | 指数分布 |  |

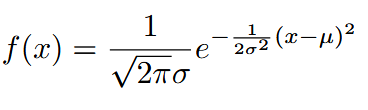

| Normal | 正态分布 |  |

| ||

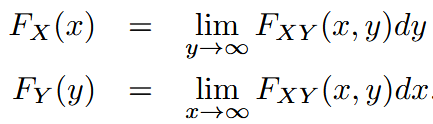

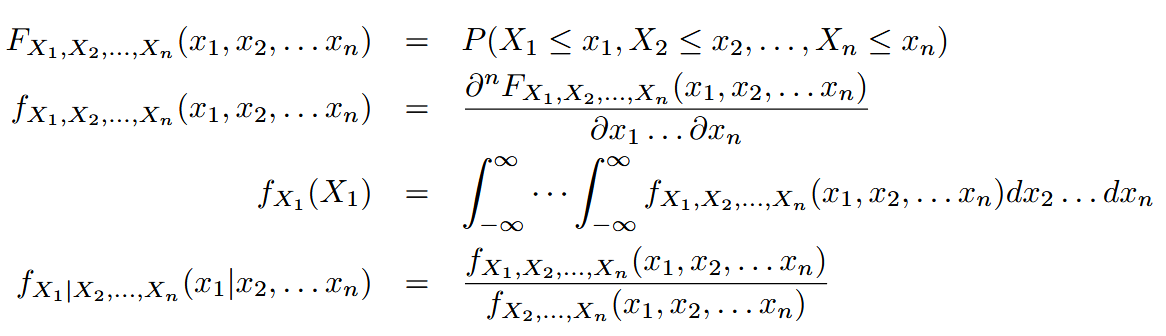

| joint cumulative distribution function | 联合累积分布函数 | |

| marginal cumulative distribution function | 边缘累积分布函数 |  |

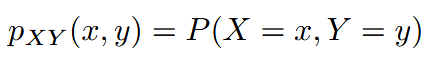

| joint probability mass function (for discrete random variable ) | 联合概率质量函数 |  |

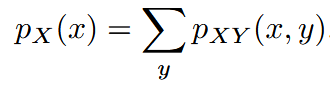

| marginal probability mass function (for discrete random variable ) | 边缘概率质量函数 |  |

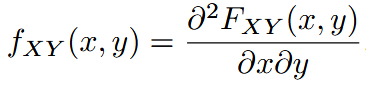

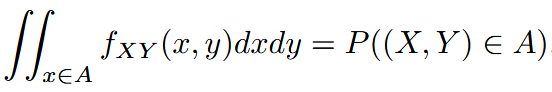

| joint probability density function | 联合概率密度函数 |   |

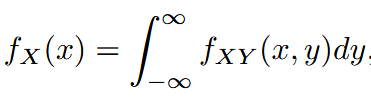

| marginal probability density function | 边缘概率密度函数 |  |

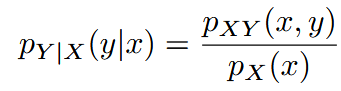

| conditional distributions | 条件分布 |  |

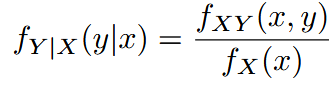

| conditional probability density | 条件概率密度 |  |

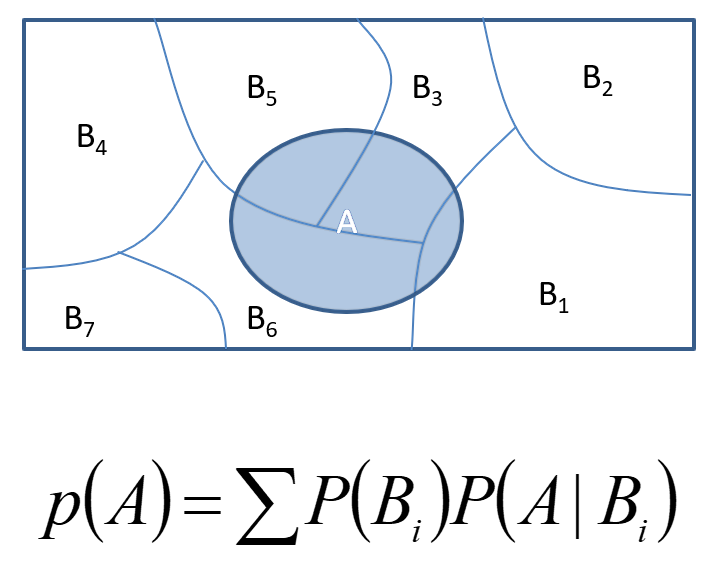

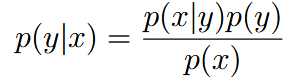

| bayes’s rule | 贝叶斯公式 | |

| ||

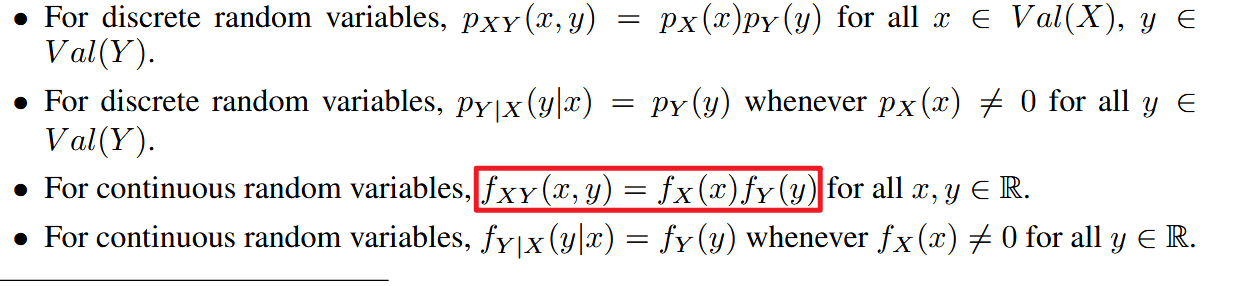

| independent | 独立(这里说的是多个随机变量) | |

| ||

| if | ||

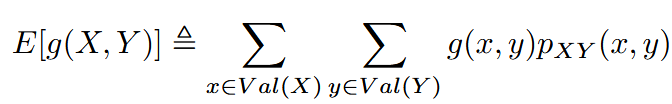

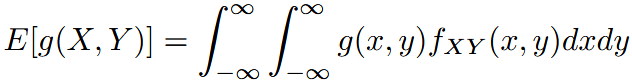

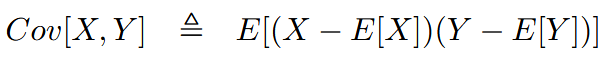

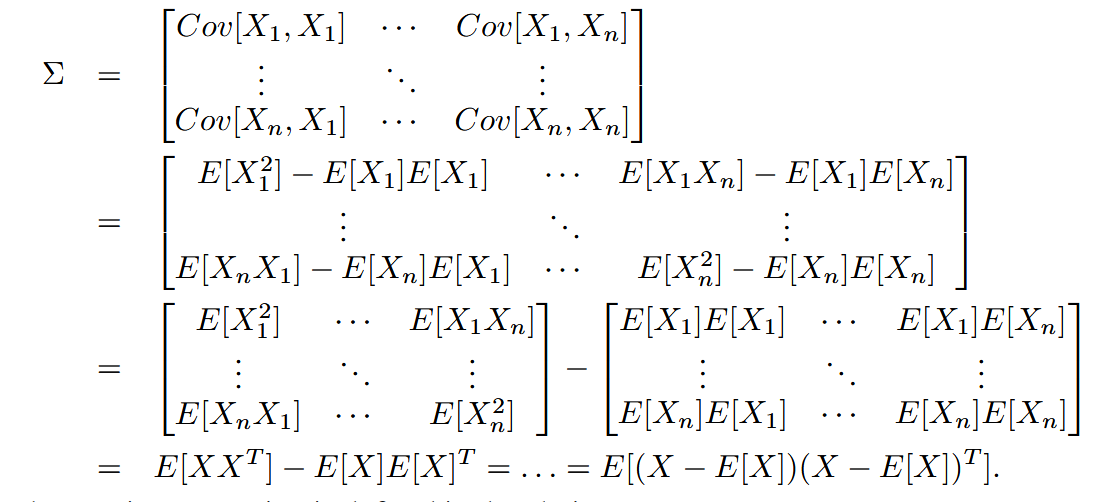

| expectation and covariance | 期望与协方差(多随机变量) |   |

| ||

| if | ||

For more random variables | ||

this is for random vector and covariance matrix | ||

| rule of total probability | 总概率法则 |  |

Machine Learning

| English | 中文 | Complementary |

|---|---|---|

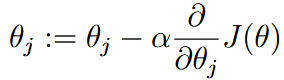

| gradient descend | 梯度下降 |  |

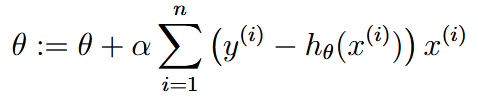

| batch gradient descend | 批量梯度下降 |  |

| For gradients, the loss function is only one global, and no other local, optima. | ||

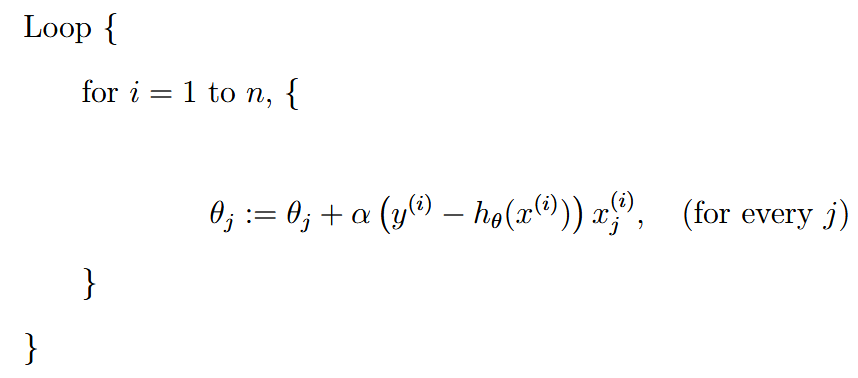

| stochastic gradient descent (incremental gradient descent) | 随机梯度下降(增量梯度下降)SGD |  一次只看一个,看一个更新一次 Often, stochastic gradient descent gets θ “close” to the minimum much faster than batch gradient descent. 当训练集非常大的时候,SGD每次更新都能看到效果,所以很不错。此时一次性更新一个训练集的批量梯度下降的开销太大 |

| least squares | 最小二乘法 | 前面的梯度下降需要迭代,这里使用最小二乘法直接算出θ的封闭解 |

| independently and identically distributed= | 独立同分布 | |

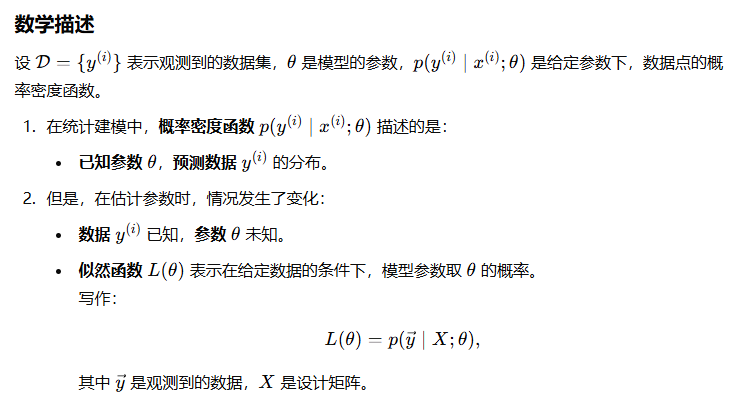

| likelihood | 似然函数 |  就是倒过来,数据集中已知x和y的情况。 说白了就是找到使得p最大的参数θ |

| maximum likelihood | 最大似然 | we should choose θ so as to make the data as high probability as possible. I.e., we should choose θ to maximize 为了方便计算,一般会使用对数似然函数,就是说白了取个log |

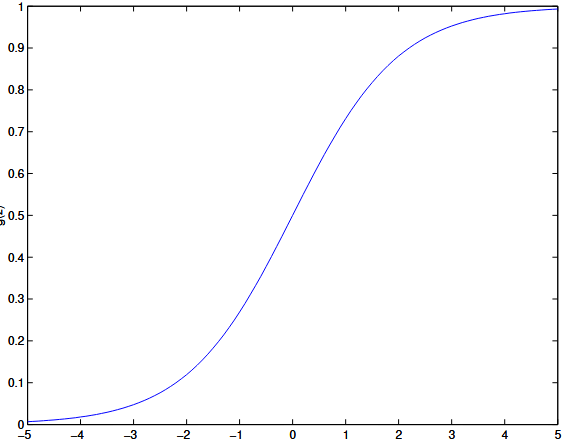

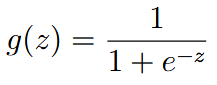

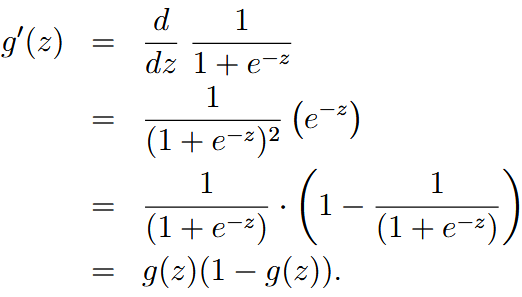

| sigmoid function / logistic function |    | |

| regression | 回归 | |

| multinominal distribution | 多项分布 | |

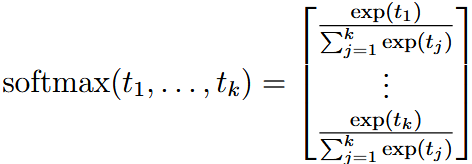

| softmax | 归一化操作 |  We usually call the |

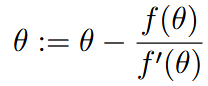

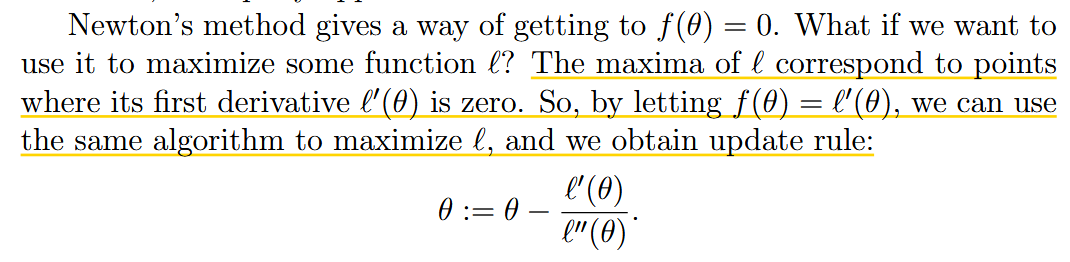

| Newton’s method | 牛顿法 | 也是一个优化方法 找0点  通过寻找二阶导的零点来找寻梯度为0的点,进而寻找极值进行优化(找最大值需要让hessian矩阵为正,也就是二阶导或者说曲率为正,反之相反)  详见pdf |

| generalized linear models | 广义线性模型(GLM) | |

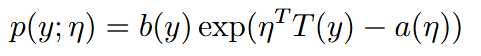

| exponential family | 指数族 |  如果能被写成这个形式,那么一个分布就可以被划为指数族 详见pdf |

| discriminative learning algorithms | 判别式学习算法 | 求 |

| generative learning algorithms | 生成式学习算法 | 建模学习 |

| class priors | 类先验 | 类先验p(y)是指在没有任何观测数据x的情况下,某一类别y出现的概率。 (利用贝叶斯来计算最后的值) (利用贝叶斯来计算最后的值)这里 这里 |

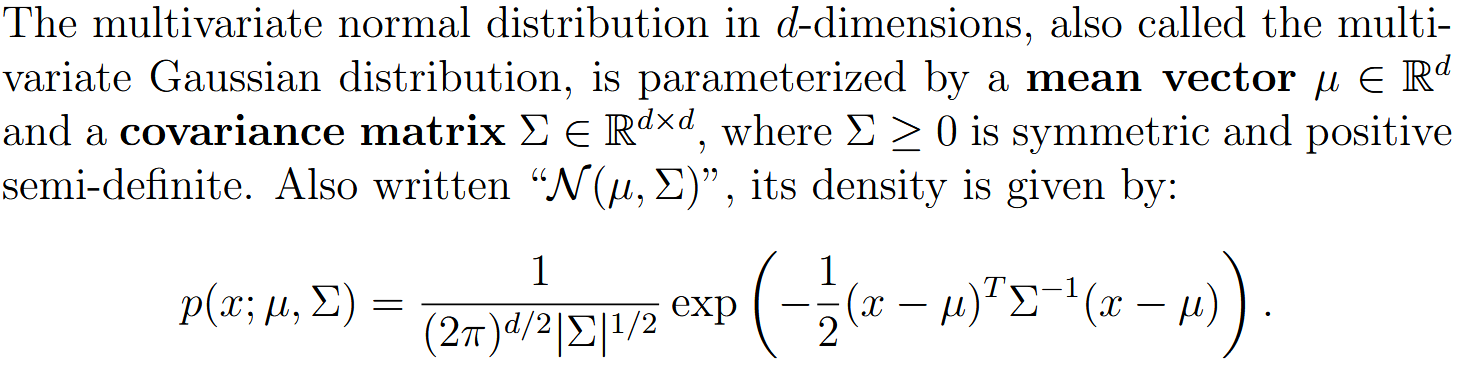

| multivariate normal distribution | 多随机变量正态分布 |  |

| gaussian discriminant analysis (GDA) | 高斯判别分析 | 详见pdf 4.1.2 基本就是一个multivariable normal distribution的generative learning algorithm |

| GDA makes stronger modeling assumptions, and is more data efficient (i.e., requires less training data to learn “well”) when the modeling assumptions are correct or at least approximately correct. Logistic regression makes weaker assumptions, and is significantly more robust to deviations from modeling assumptions GDA有着更强的假设,也就意味着更弱普适性 GDA数据效率更高,但是适合的数据类型少,实际应用中逻辑回归更为常见 | ||

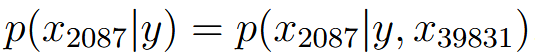

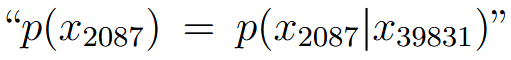

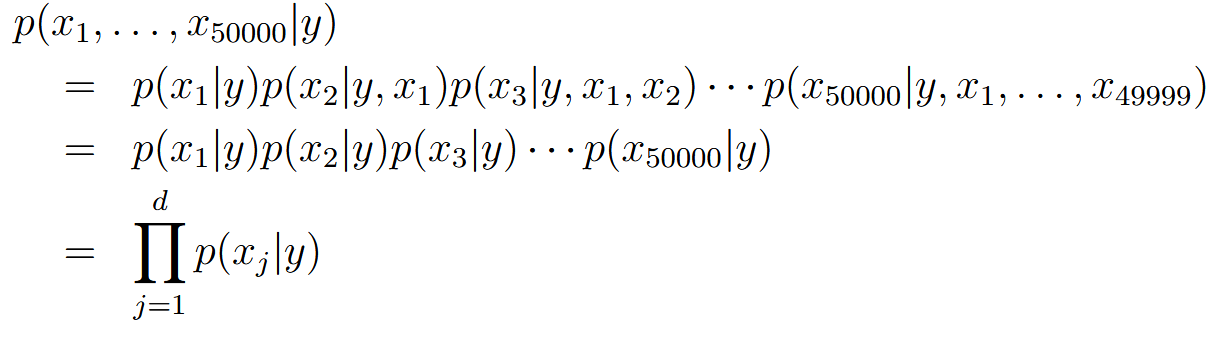

| Naive Bayes (NB) assumption | 朴素贝叶斯假设 | 计算 这个时候就需要假设:  记住是在given y的情况下,也就是说  并不一定成立 |

| Naive Bayes classifier | 朴素贝叶斯分类器 |  |

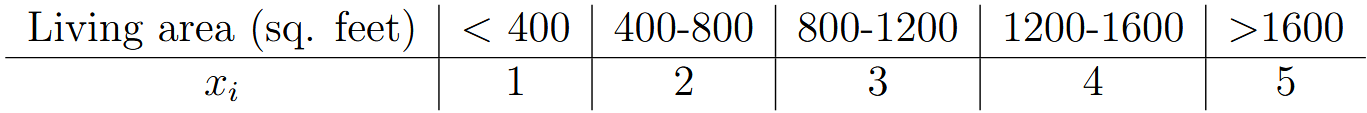

| discretize | 离散化 |  convert continuous variables to discrete variables (usually when using naive bayes to continuous variables) |

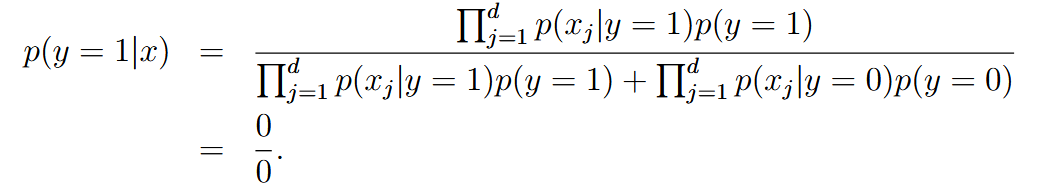

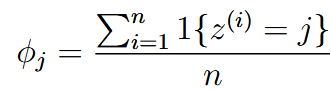

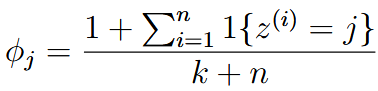

| laplace smoothing | 拉普拉斯平滑 | Sometimes the input may never appearing the training set, which will cause all the result to 0 or 0/0 like: To mitigate it:   |

| numerator | 分子 | |

| denominator | 分母 | 拉普拉斯平滑分母上加的k通常是所有样本数目,因为必须要保证概率和为1 |

| cubic function | 三次函数 | |

| RHS | 右边 | right hand side |

| kernel trick | 核技巧 | 通过换元避免在高维空间中进行大量的计算(详见pdf 5.3) |

| kernel的最后一节最后完全没看懂 重新看一遍 | ||

| support machine learning | 支持向量机 | |

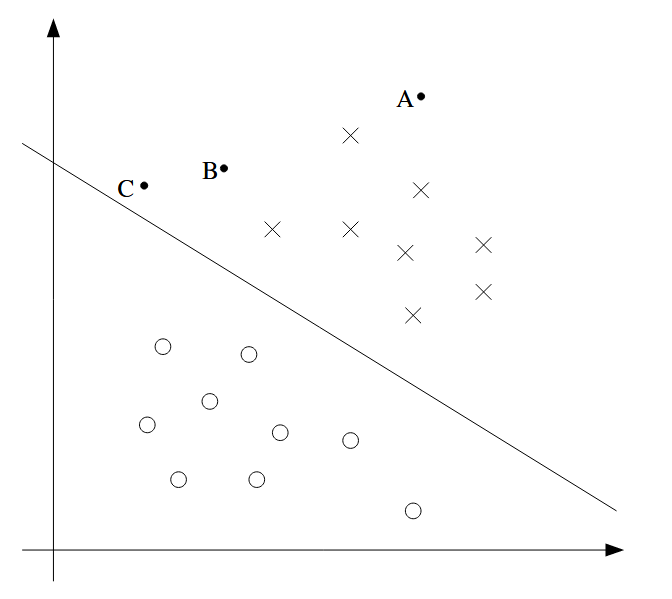

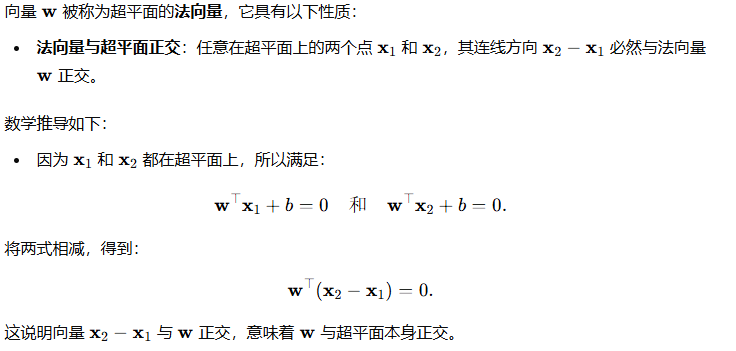

| separating hyperplane | 分离超平面 |  图中斜线 |

| margin | 边距 | 离”separating hyperplane“的“距离”,一般而言点离这个分界线越远,对于该点的预测更加confident |

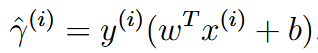

| functional margin | 功能边距 |  该值为正的时候预测正确(负负或者正正),为负的时候错误(一正一负)。同样希望这个距离很大,这样更加confident |

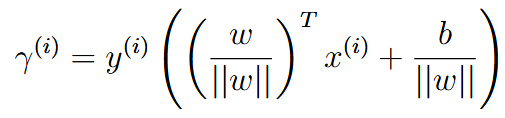

| geometric margin | 几何边距 |   具体推导看63页 |

| 把这个边距 | ||

| lagrange duality | 拉格朗日对偶性 | |

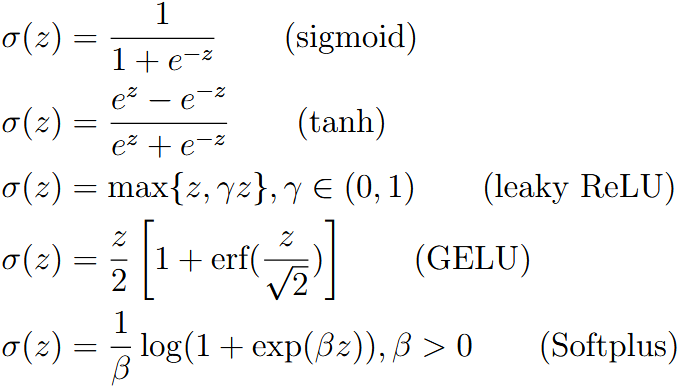

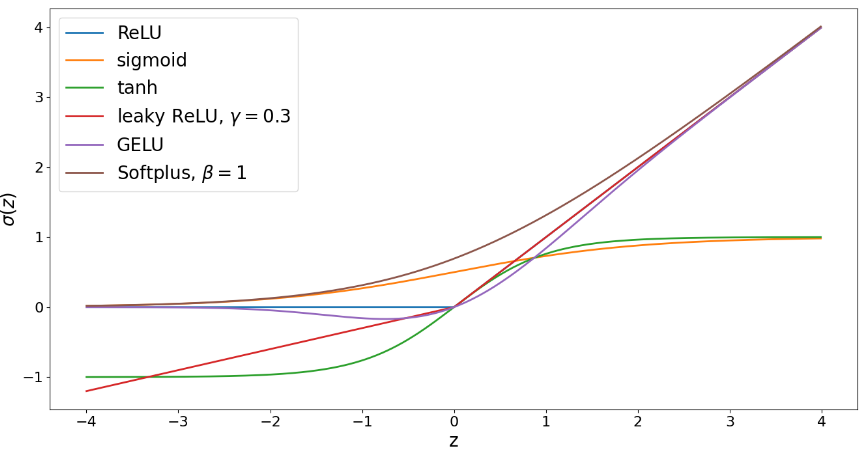

| activation function | 激活函数 | Typically, for multi-layer neural network, at the end, near the output, we don’t apply ReLU (or other activation functions), especially when the output is not necessarily a positive number  NOTE: the |

| relu | 某种激活函数 | |

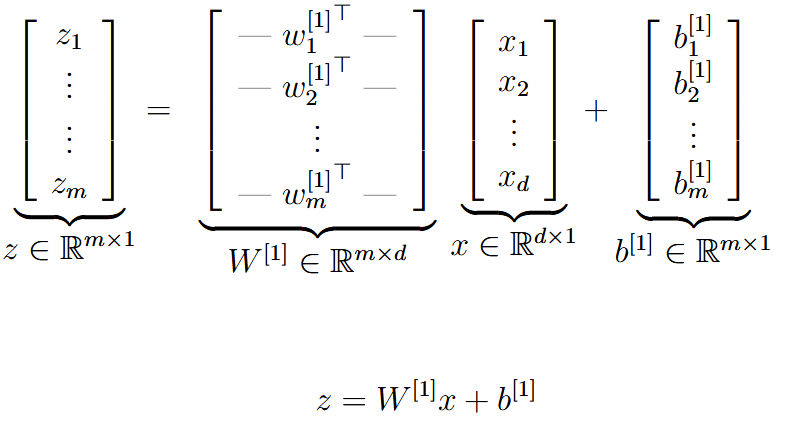

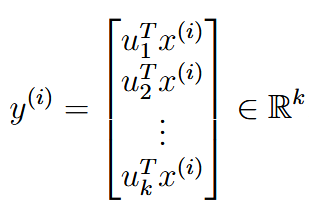

| vectorization | 向量化 | 如果一个neural network里面每次参数与x计算都单独算的话,会大量地使用for循环。这样计算效率会非常低。所以使用线性代数来进行向量化,加快并行计算 |

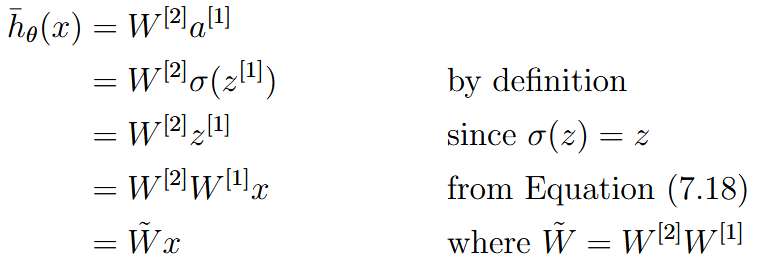

| identity function | 恒等函数 |  (apply a linear function to another linear function will also end to a new linear function) |

| feature engineering | 特征工程 | the process of designing the feature maps We can view deep learning as a way to automatically learn the right feature map (sometimes also referred to as “the representation”) 神经网络通常不用人们像传统的特征工程里面去精心根据领域知识(domain knowledge)去设计特征映射,每一个神经元可以自己学习 |

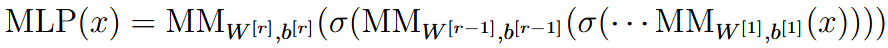

| multi-layer perceptron | MLP |  |

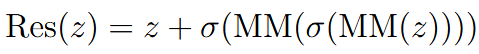

| residual connection | 残差连接 |  |

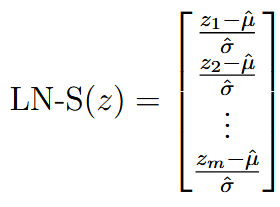

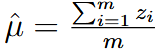

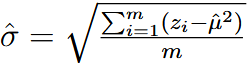

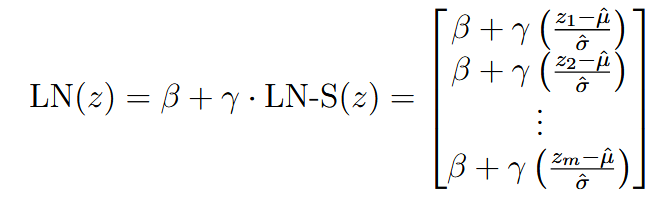

| layer normalization (LN) |    | |

| 这里求解deviation的时候除的m而不是m-1,是因为想让LN输出的和为1 | ||

Oftentimes zero mean and standard deviation 1 is not the most desired normalization scheme | ||

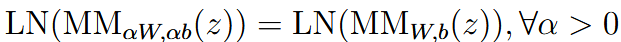

| scaling-invariant property | 放缩不变性 |  |

| when we talk about the weights’ scaling-invariant property, we usually talk about the weights except the last layer. Because usually we do not do normalization to the last layer (same as the activation function) | ||

| Batch normalization and group normalization are more often used in computer vision applications whereas layer norm is used more often in language applications. | ||

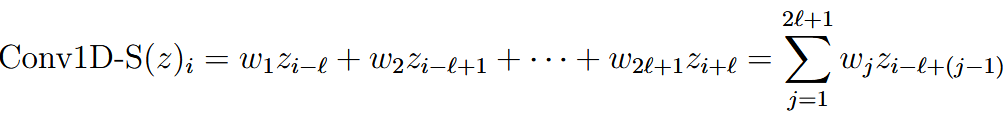

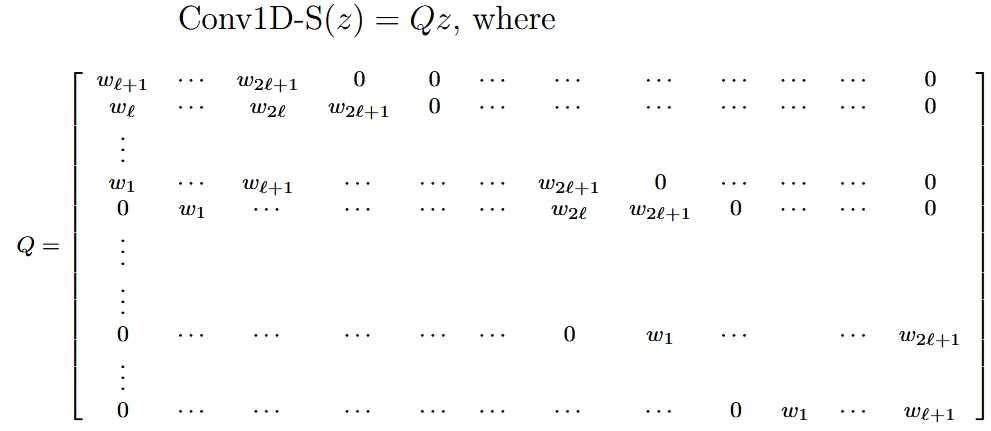

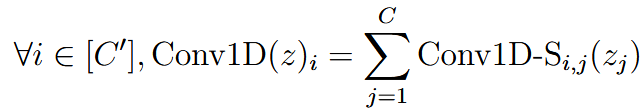

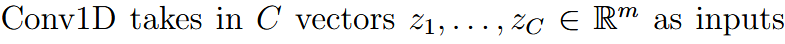

| convolutional layers | 卷积层 | For one dimension: We assume the  对于超出原始z的值,padding为0  multiple channel version:   |

| 一维卷积往往用于NLP领域,二维卷积往往用于视觉处理 | ||

| backpropagation | 反向传播 | |

| partial derivatives | 偏导 | |

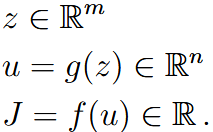

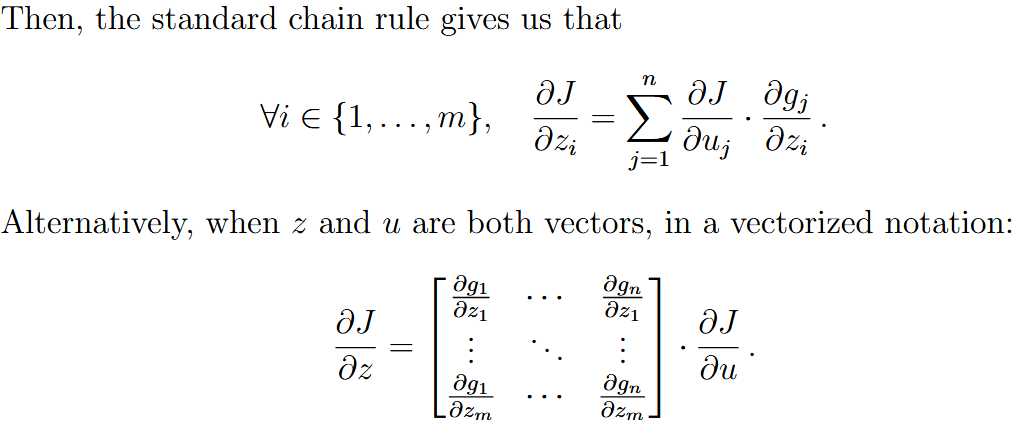

| chain rule | 链式法则 | background:  chain rule (for scalars and vectors):  for simplicity:  |

| Backpropagation contains two stages: forward pass and backward pass. During the forward pass, it will save all the intermediate variables | ||

| generalization | 泛化性 | |

| overfit | 过拟合 | the model perform well on training set but not that good on test set |

| underfit | 欠拟合 | the model perform not good even training set |

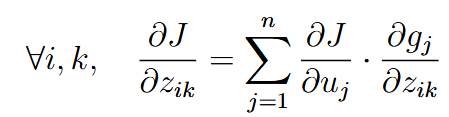

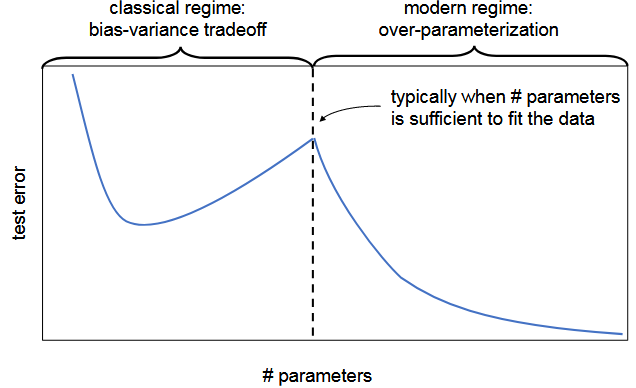

| bias-variance tradeoff | 偏差-方差权衡 | |

| bias | 偏差 | the bias captures the part of the error that are introduced due to the lack of expressivity of the model 很多时候是模型不够强大导致,比如使用一次函数预测二次函数生成的数据集。也可能是数据集不够。总而言之就是欠拟合 |

| variance | 方差 | The variance term captures how the random nature of the finite dataset introduces errors in the learned model (It measures the sensitivity of the learned model to the randomness in the dataset. It often decreases as the size of the dataset increases.) 可能是因为模型过于强大(数据量不足会加剧这个),导致其捕捉了很多无关信息,比如数据生成过程的噪声。说白了就是过拟合 |

Often, there is a tradeoff between bias and variance. If our model is too “simple” and has very few parameters, then it may have large bias (but small variance), and it typically may suffer from underfittng. If it is too “complex” and has very many parameters, then it may suffer from large variance (but have smaller bias), and thus overfitting. 数学推导看pdf121页 | ||

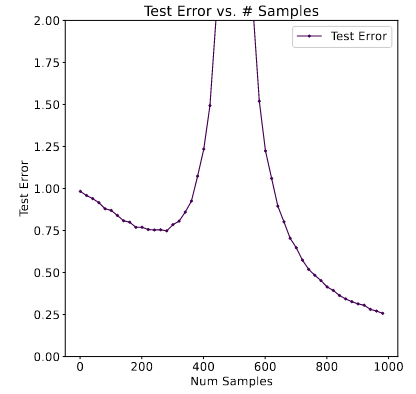

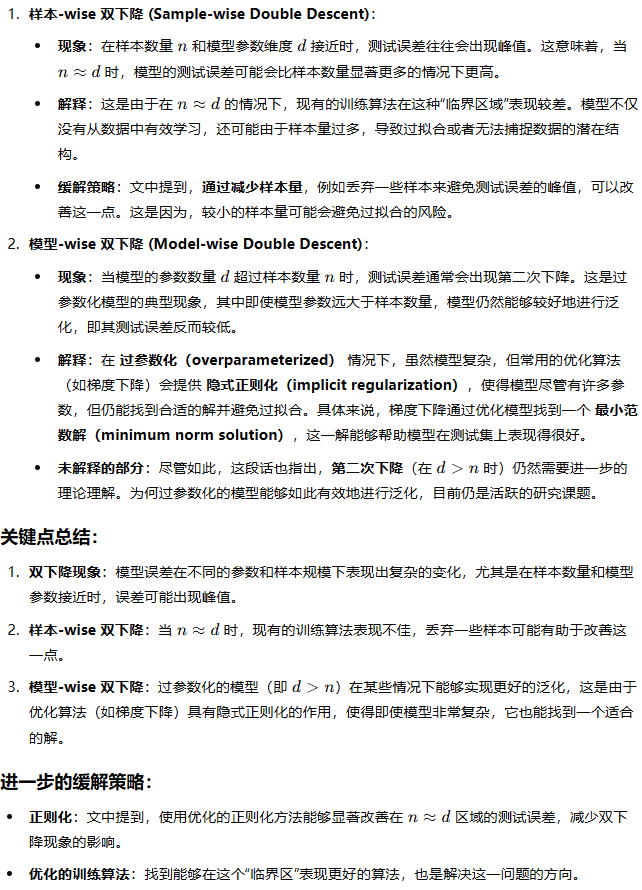

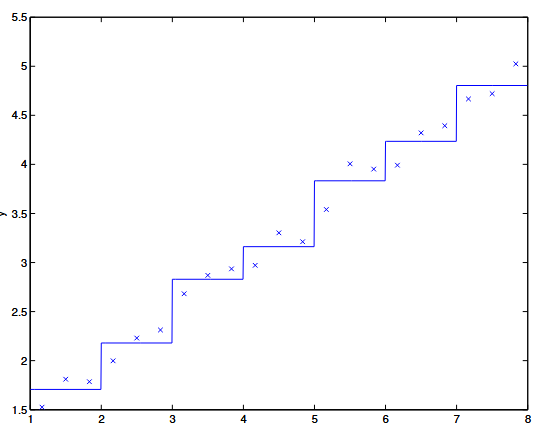

| double descent phenomenon | 双下降现象 | 模型的error分为两部分,bias和variance,一般而言,随着模型参数量增加,error会呈现出一个先下降后增加的趋势(convex)。然而有时候会下降两次  对于训练数据同理。图一model-wise,图二sample-wise |

To some extent, sample-wise double descent and model-wise double descent are essentially describing similar phenomena—the test error is peaked when n ≈ d. | ||

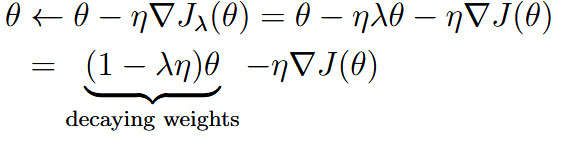

| regularization | 正则化 | the regularizer |

| weight decay | 权重衰减(欧几里得范数正则) |  |

| ||

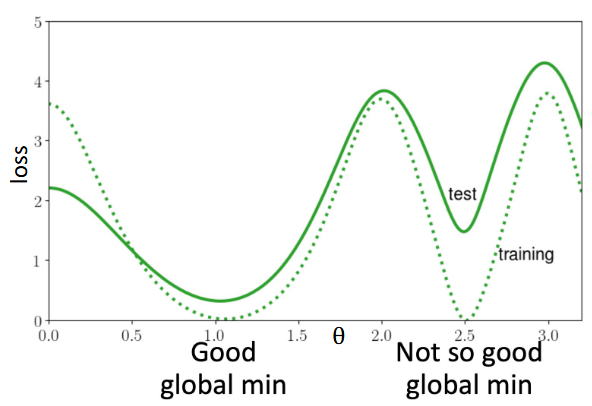

| implicit regularization effect | 隐式正则化效果 | optimizers can implicitly impose structures on parameters beyond what has been imposed by the regularized loss 正则化的训练loss也许不止一个全局最优解,即使在训练集上同样效果,但是有的优化器可能会因为将模型变得更加稀疏或怎样,使得其在测试集上效果更好  |

| In summary, the takehome message here is that the choice of optimizer does not only affect minimizing the training loss, but also imposes implicit regularization and affects the generalization of the model. Even if your current optimizer already converges to a small training error perfectly, you may still need to tune your optimizer for a better generalization. | ||

| Lipschitz | more Lipschitz model: 更平滑的模型,模型在输入空间中对输入变化的响应更为平缓或稳定。 | |

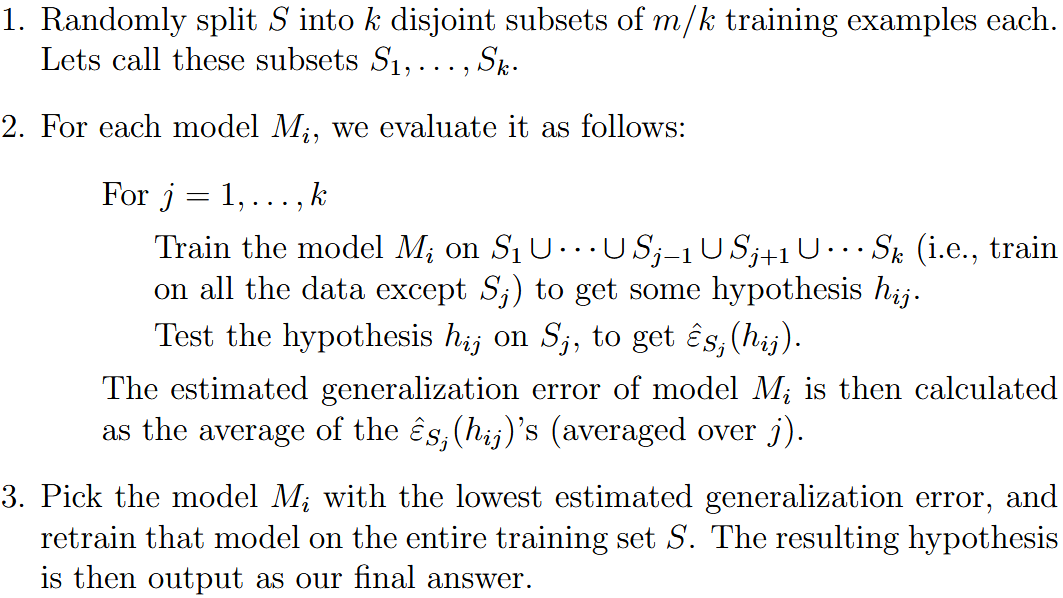

| cross validation | 交叉验证 | 说白了就是把数据分成训练集和测试集,在训练集上训练,然后选择测试集上误差最小的模型。 |

| k-fold cross validaition | k折交叉验证 | 普通交叉验证要浪费一部分数据,因为测试集不能用于训练。 K折:  |

| leave-one-out cross validation | 留一法交叉验证 | 数据实在太少的时候,k就等于数据量。每次只留一个数据 |

| maximum likelihood estimation (MLE) | 最大似然估计 |  |

| this is used to estimate the parameter | ||

| clustering | 聚类 | 只有x没有y,是一种unsupervised learning problem n the clustering problem, we are given a training set {x(1), . . . , x(n)}, and want to group the data into a few cohesive “clusters.” |

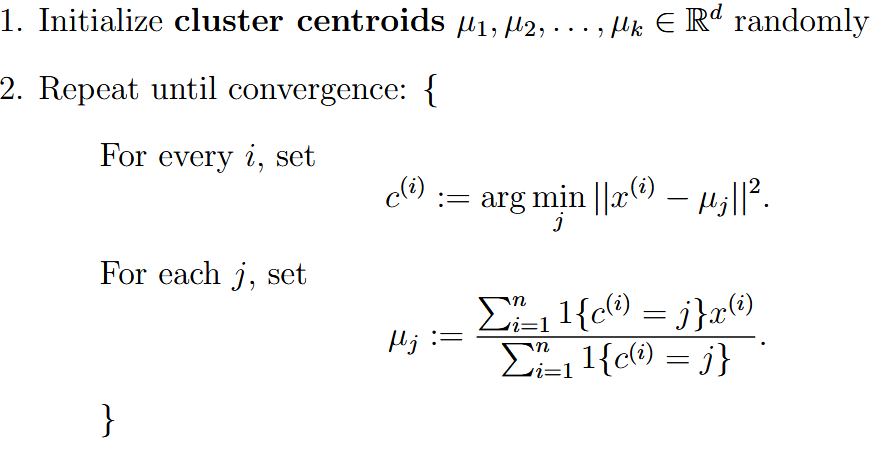

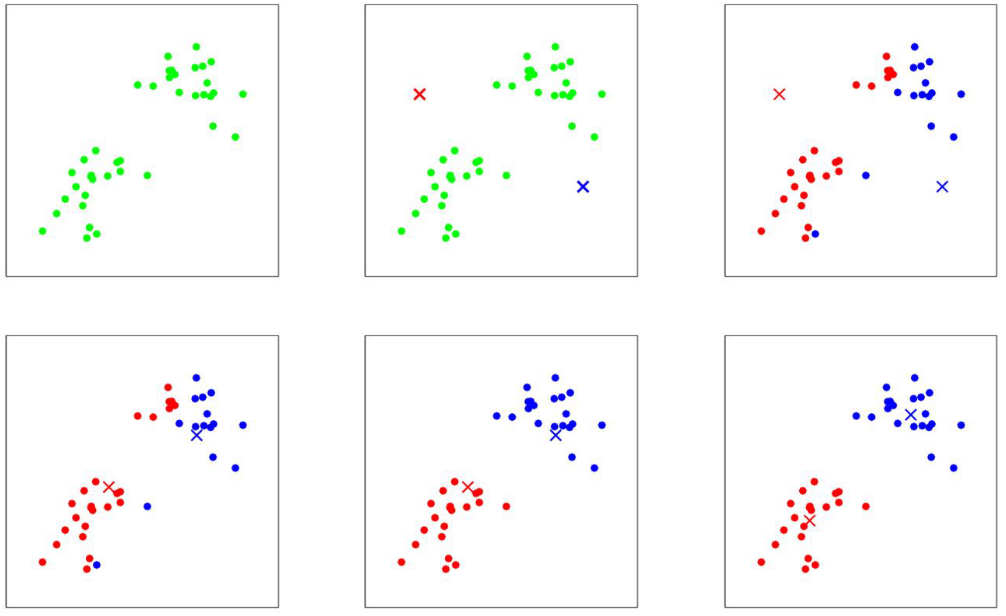

| k-means | k近邻 |  先随机选k个中心点。根据距离聚类,每一类产生一个新的中心点,直到收敛。  |

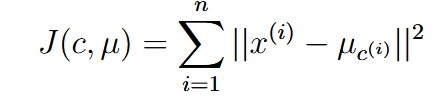

| distortion function | 畸变函数 |  J的值随着kmeans的循环是单调递减的,所以kmeans一定收敛 但是kmeans也有可能只是局部最佳,可以多次运行更换初始值避免 |

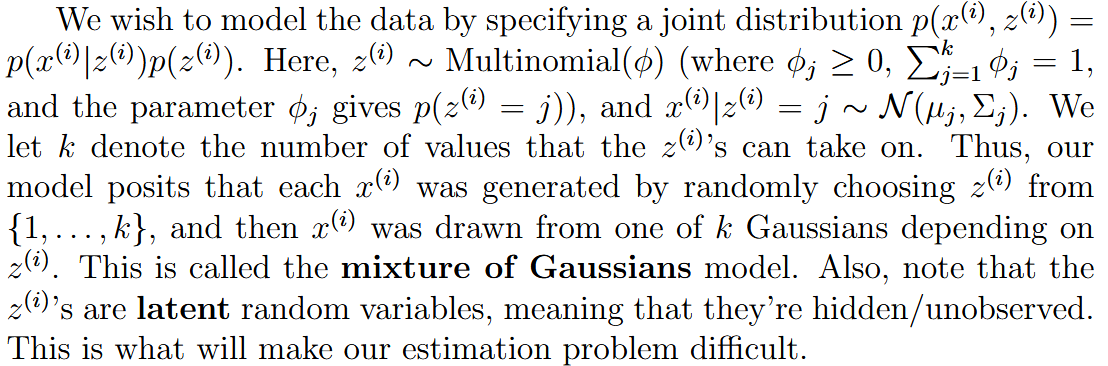

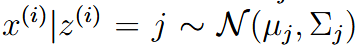

| Mixture of Gaussians | 混合高斯模型 | background: 就是说我们只能观测到x,但是x是由隐变量z决定的正态分布产生的  每个x对应一个隐藏的不可观测的z所对应的高斯分布,所以是混合高斯模型  |

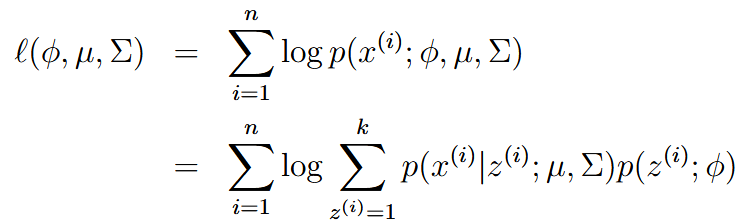

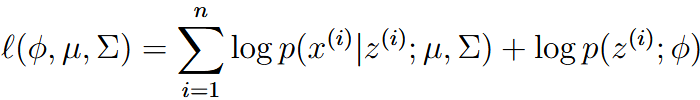

| latent random variable | 隐变量 | 隐变量z决定了生成x的高斯分布,如果我们知道z是什么,那么上面的似然估计就可以写成: 这里的优化问题就和gaussian discriminant analysis很像了,这里的z就像是GDA里面的y。 但是!不一样的是!我们这里并不知道z! |

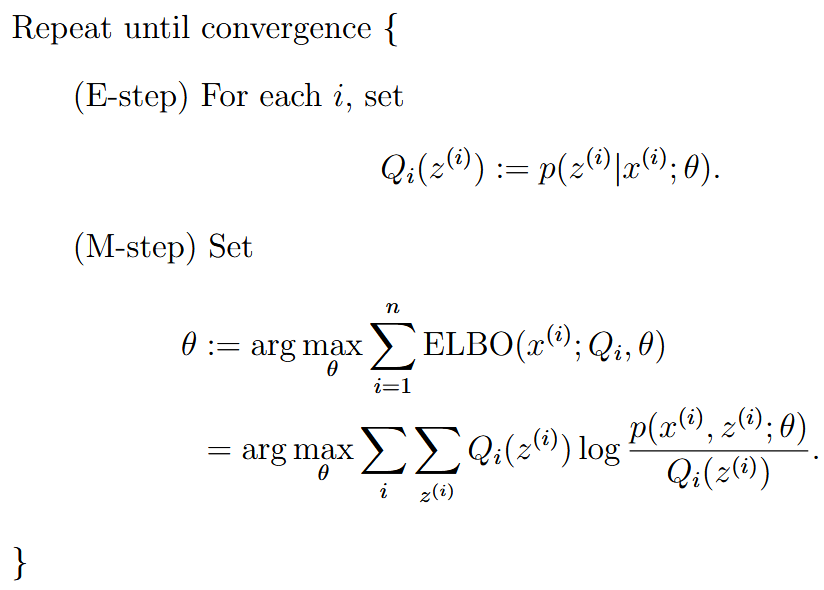

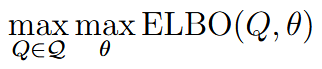

| evidence lower bound | ELBO | |

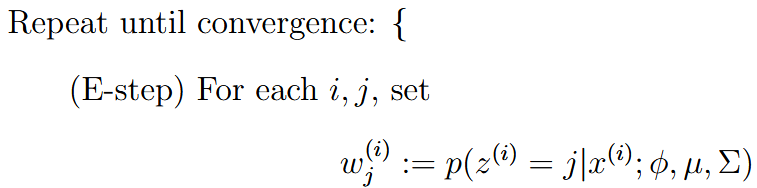

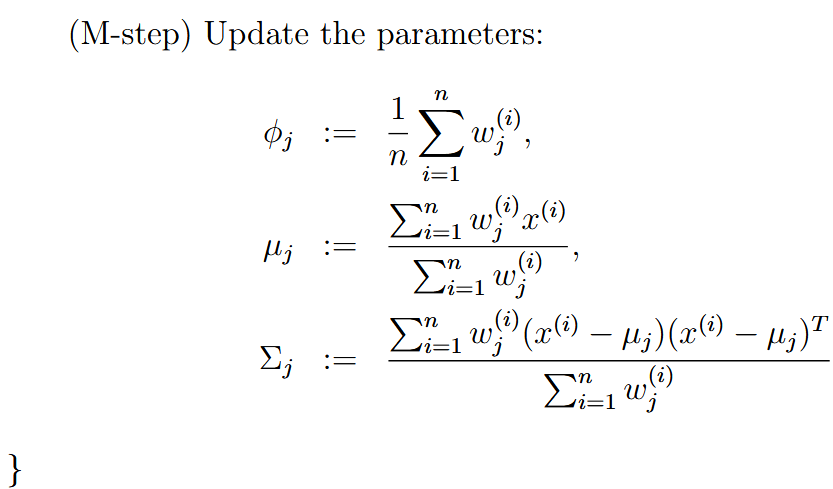

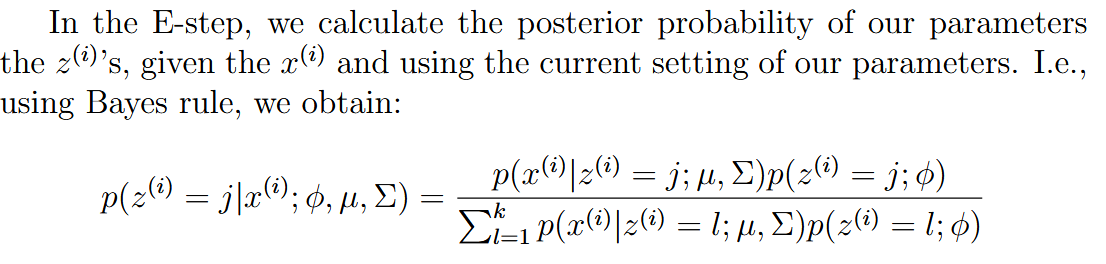

| EM algorithm (expectation-maximization) | 期望最大化算法 | 分为E和M两步,E步的时候就直接猜z的值,M步的时候就要假装E猜的是对的,然后进行优化   (这里E的时候也不是乱猜的,是使用的贝叶斯去算的z的后验概率,然后把这个后验概率假装是正确的) |

| EM和kmeans其实很相似。不同之处在于kmeans在初始化(可以看作第一个E步的时候)使用hard guess,直接随机初始化了几个点。而EM在E的时候(*这里)使用的是soft guess。 EM同样会有可能遭遇到局部最优的情况 | ||

使用z关于x的后验概率当作z的分布(soft guess)(推导见:pdf 11.3) 这里其实就是下界优化,最大化ELBO | ||

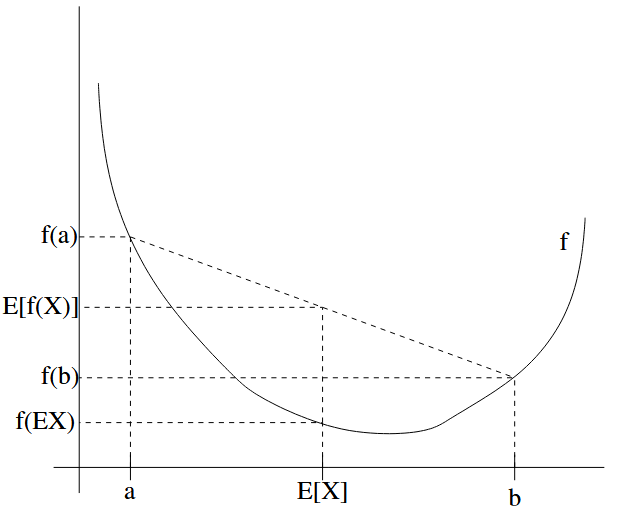

| Jensen’s inequality | 詹森不等式 | 如果 如果这个函数的hessian矩阵式半正定 (positive semi-definite),那么他是凸函数,如果是正定的,那么是严格凸函数 对于凹函数同样适用,把符号改个方向就好了 |

| concave/convex function | 凹函数/凸函数 | 这里的理解和中文语境里面的凹凸其实是相反的 |

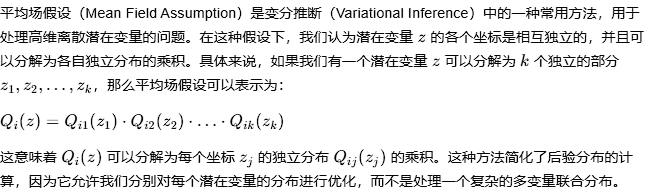

| variational inference | 变分推理 | 在EM算法中,我们是有posterior probability p(z|x, θ),来当作z的概率,但是实际情况中这个可能会十分难以计算。所有变分推理中寻找approximation of the true posterior distribution 在一个分布族 |

| mean filed assumption | 平均场假设 | 对于高维discrete random variable z来说,使用平均场假设 |

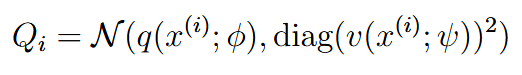

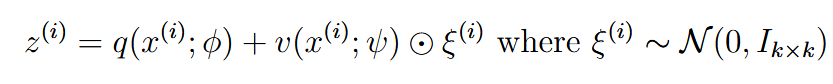

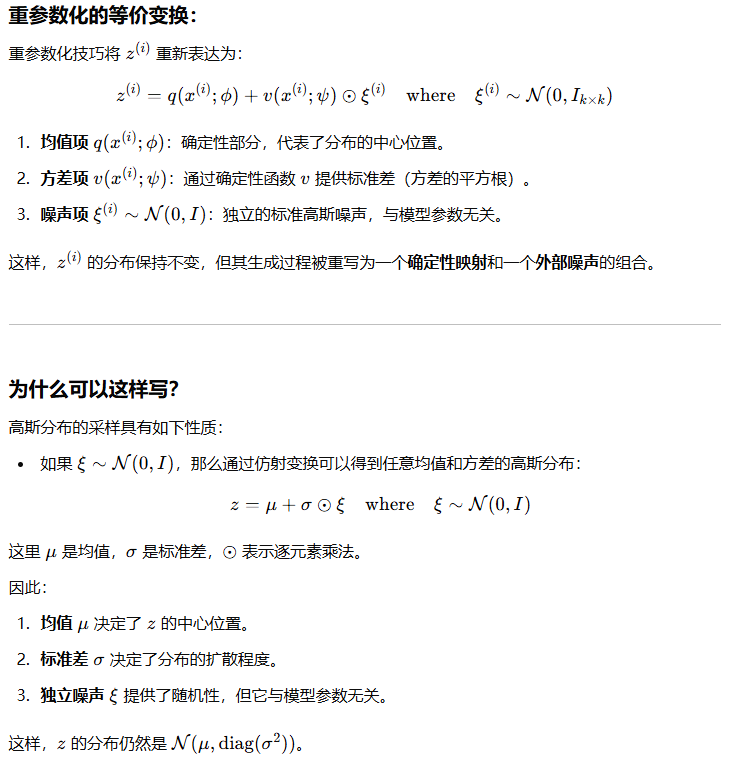

mean filed assumption仅仅针对于离散的,对于continuous random variable而言 q v分别是两个函数 | ||

| re-parameterization | 重参数化 |   |

| 变分推导和变分自编码器看pdf 13.5,目前没太看懂 大概意思是使用一个Q的估计,因为Q太难算了。但是这个估计的形式我没看懂 | ||

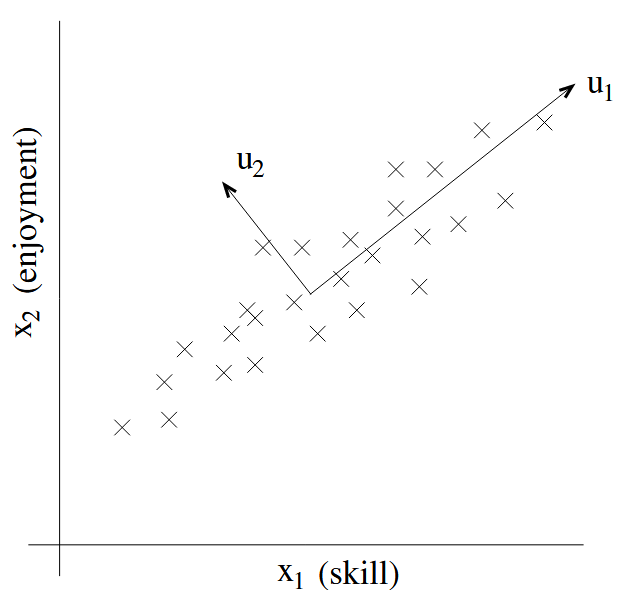

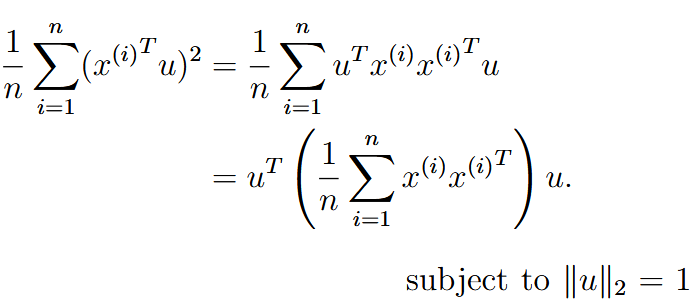

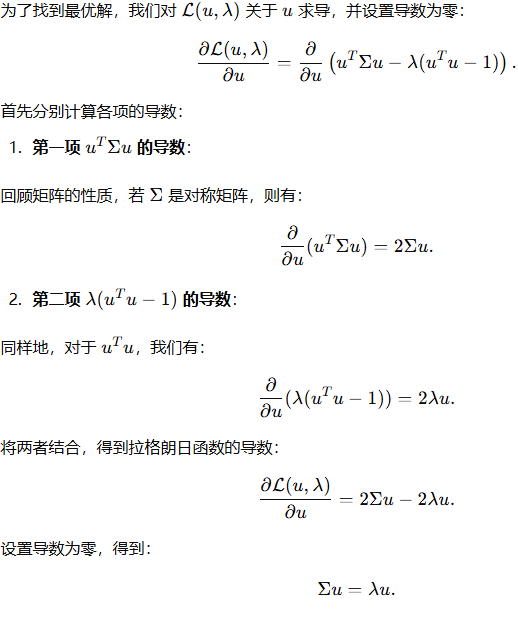

| principal components analysis (PCA) | 主成分分析 | 数据压缩 to find the major axis of variation |

| Before PCA, first normalize the data by | ||

| ||

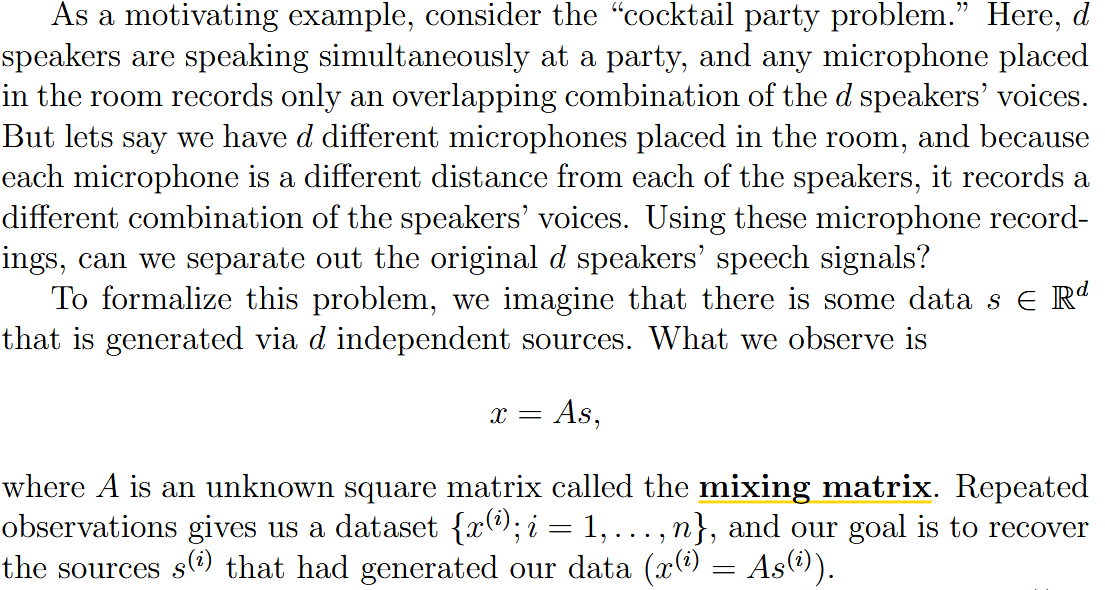

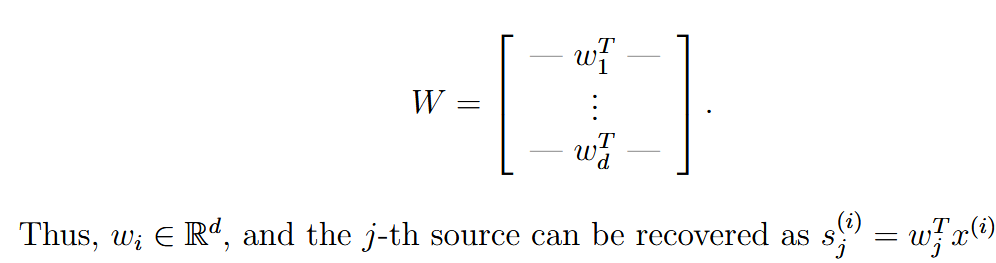

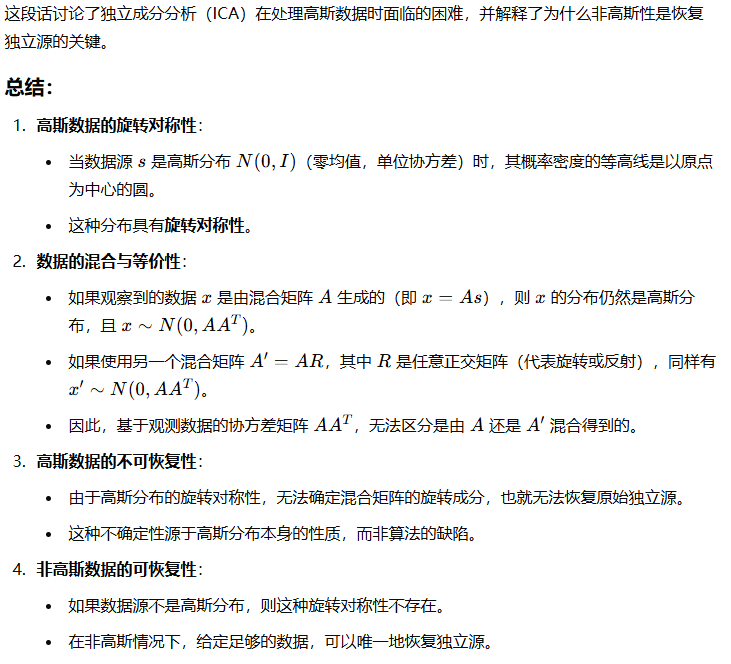

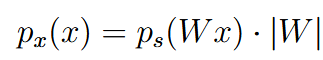

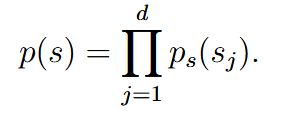

| independent components analysis (ICA) | 独立成分分析 | background: 这里s是说话的人,A是混合,x是最后录入的结果,目标是复原s,也就是找到  |

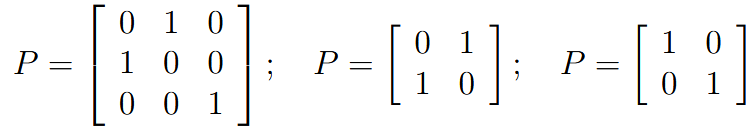

| permutation matrix | 排序矩阵 | 如: 每一行每一列有且仅有一个1 |

| ICA ambiguities | ICA 模糊性 | pdf 13.1 大概意思就是对W的scale很多情况下不影响,即使还原的s会不同,比如放缩或者正负。但是放缩这些对于声音数据来说就是调节了一下音量。很多别的应用场景这个同样不影响 |

高斯分布的旋转对称性导致无法通过 ICA 恢复原始独立源,但只要数据源是非高斯分布,就可以消除这种不确定性,从而唯一确定独立源。 | ||

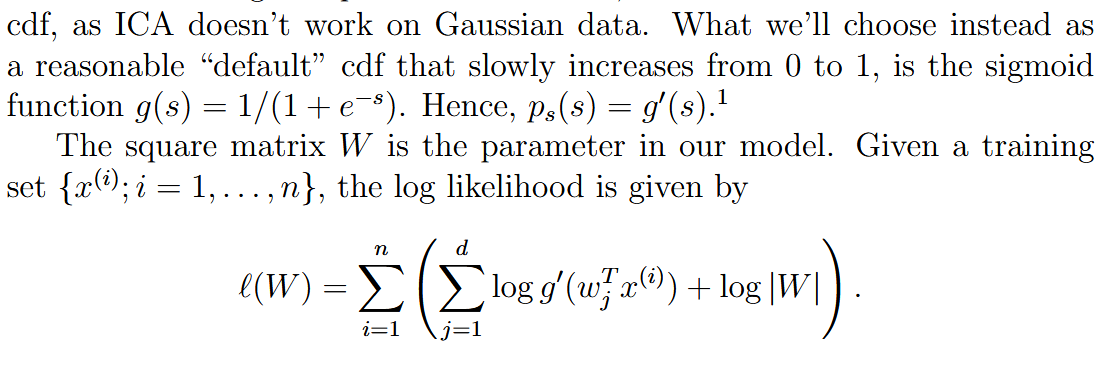

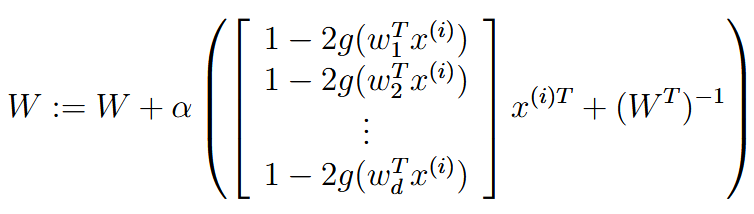

| ||

ICA:    这里指定z的CDF是sigmoid,这个可以自己选择,只要不是高斯分布就行 | ||

| reinforcement learning | 强化学习 | In the reinforcement learning framework, we will instead provide our algorithms only a reward function, which indicates to the learning agent when it is doing well, and when it is doing poorly. |

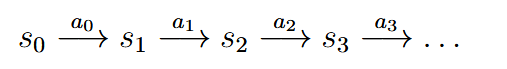

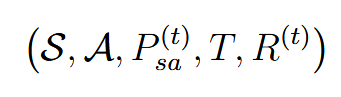

| markov decision process (MDP) | 马尔可夫决策过程 | |

| ||

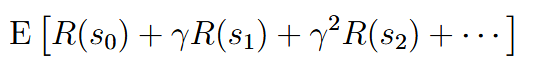

最终优化目标:最大化奖励和的期望值 | ||

| policy | 决策 |  |

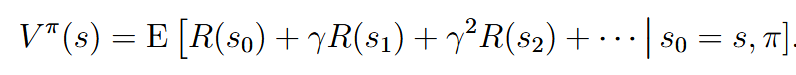

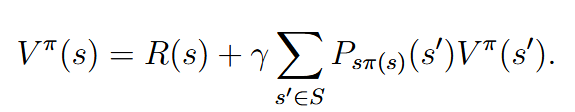

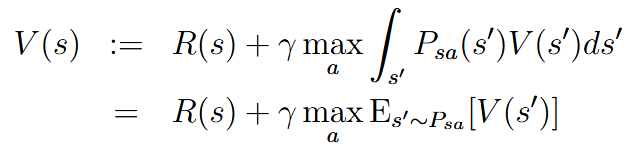

| Bellman equations | 贝尔曼等式 | 如果policy  这里是一个recursive function,第一个是intermediate reward,是初始阶段获得的奖励,后面就是一个递归(后面这项其实是一个期望,就是p(x) * ) 这个贝尔曼等式用于解决有限状态(要求决策固定)的时候十分好用 |

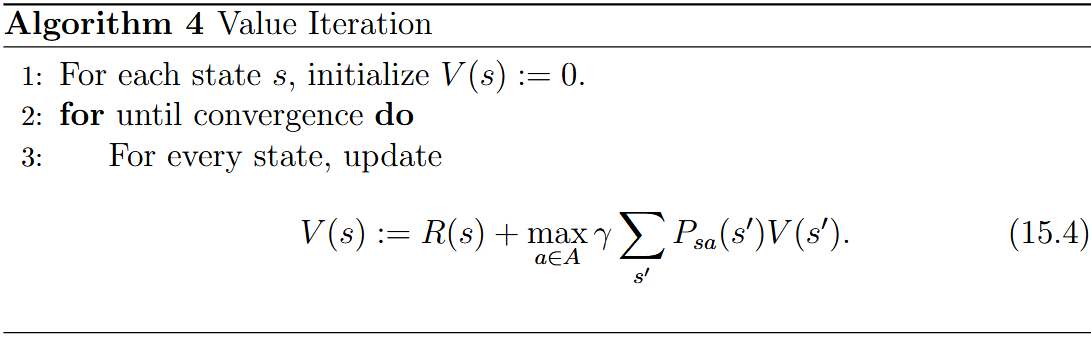

| value iteration | 价值迭代 |  (有限状态与action) (有限状态与action)synchronous update:每次一个loop之后再全部更新 asynchronous update:每次一个V都更新,异步 |

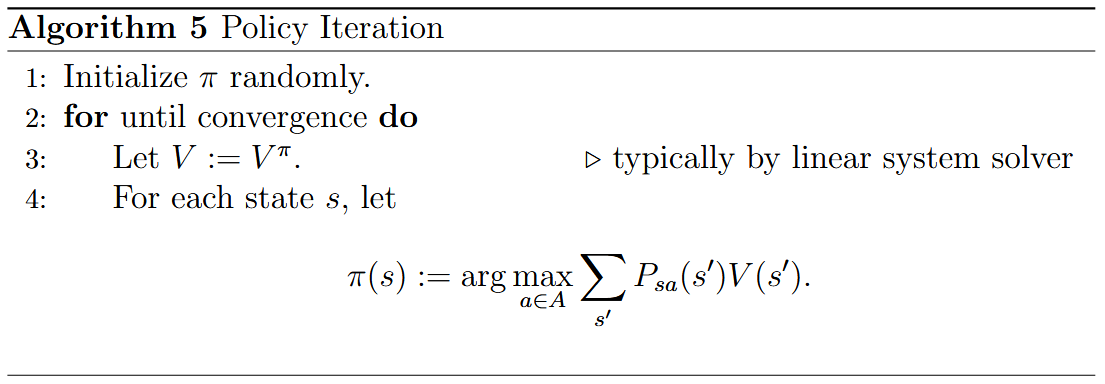

| policy iteration | 策略迭代 |  |

| ||

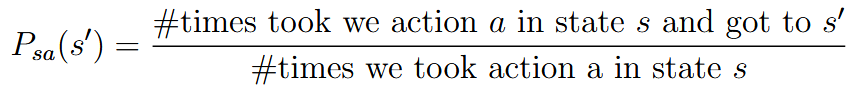

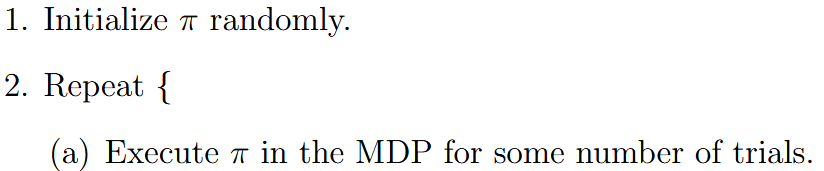

learning the MDP  | ||

| Continuous state MDPs | 连续状态马尔科夫决策过程 | 上面都的情况state都是discrete,现在讨论连续的,意思就是state就infinite个 |

| discretization | 离散化 | 用一些离散值来表示一个连续值 有两个缺点: 1.  并不够平滑 并不够平滑2. curse of dimensionality 维度诅咒。For example, for |

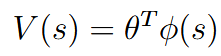

| value function approximation | 值函数近似 | 近似这个函数 |

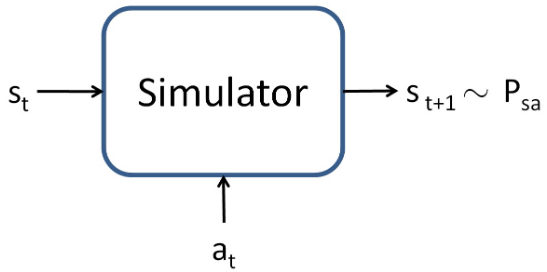

we assume we have a model, or simulator 这个simulator可以有多种形式。比如使用物理模拟器、多跑几个trial得到distribution计算,或者就是自定义一个模型(神经网balalala) | ||

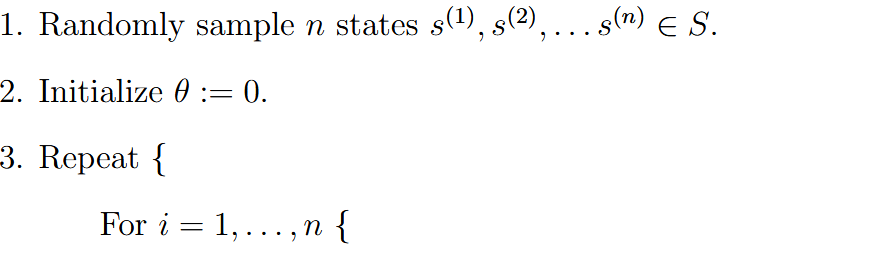

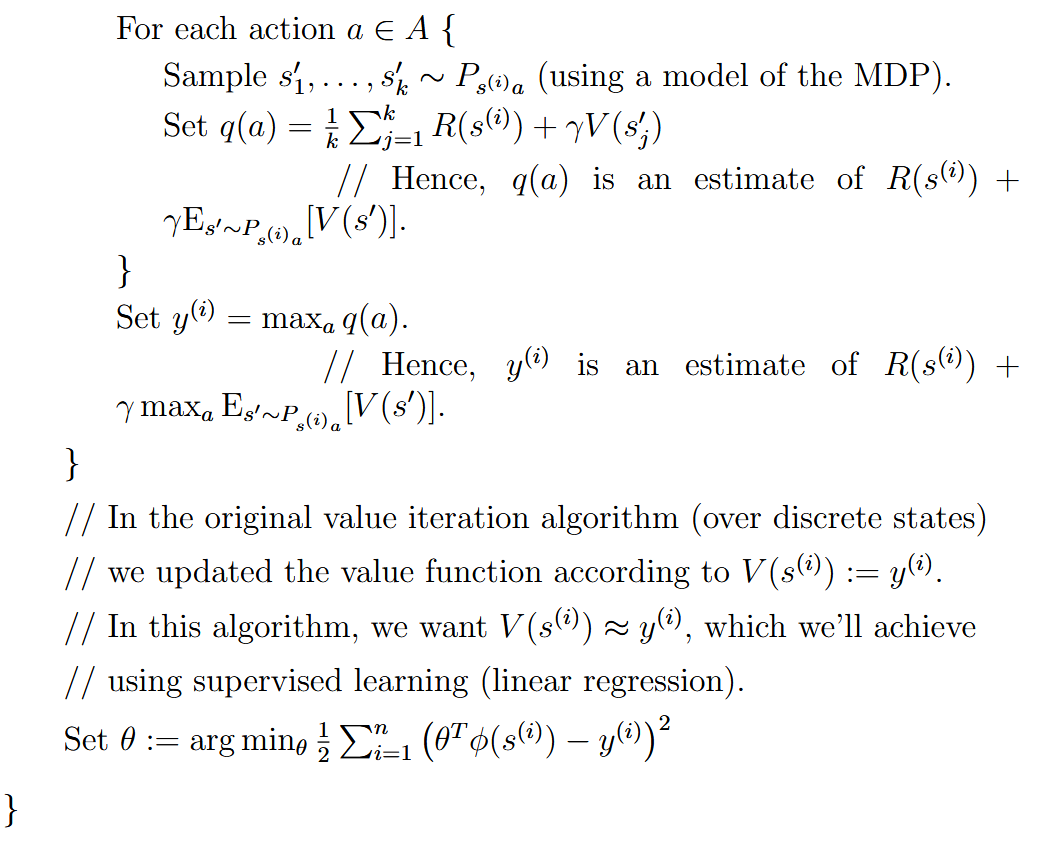

| Fitted value iteration | 拟合值迭代 | 假设state连续,但是action很少并且离散 For continuous state:  (就是从state空间里面取样) (就是从state空间里面取样)    这里也是采样k个,因为是连续的有无数个,所以只采样k个进行近似 这里也是采样k个,因为是连续的有无数个,所以只采样k个进行近似 |

| finite horizon MDP | 有限视野MDP |  取消了discount factor,加入了time factor。 And this is general for both discrete and concrete states and actions |